Are you struggling to understand what variance and standard deviation are all about? Don’t worry, I’m here to help! In this article, I’ll explain these statistical concepts in a way that’s easy to understand, without using any confusing jargon.

Variance and standard deviation: what are they?

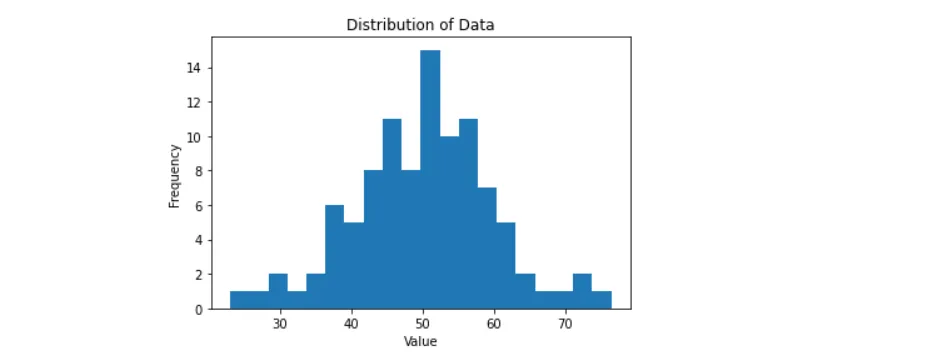

Variance and standard deviation are both measures of variability in a set of data. Variance tells us how spread out the data is: it is the average of the squared differences between each value and the mean. The standard deviation is the square root of the variance. Because taking the square root undoes the squaring, the standard deviation is expressed in the same units as the data itself, which makes it the easier of the two to interpret as a typical distance from the mean.

How to Calculate Variance and Standard Deviation

Calculating variance and standard deviation can seem daunting, but it’s actually not too difficult. Here are the formulas:

Variance:

For a population: σ² = Σ(x - μ)² / N

For a sample: s² = Σ(x - x̄)² / (n - 1)

Standard deviation:

For a population: σ = sqrt(σ²)

For a sample: s = sqrt(s²)

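Don’t worry if you don’t understand these formulas yet. Here is what each symbol means: Σ means “add up everything that follows”, x is an individual value in the data, μ is the mean of the whole population, x̄ is the mean of the sample, N is the number of values in the population, and n is the number of values in the sample. In words: subtract the mean from each value, square each result, add the squares together, and divide by N (or by n - 1 for a sample).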
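To see the formulas in action, here is a minimal Python sketch that follows them step by step. The function names and the data set are made up for illustration; they are not part of any library.

```python
import math

def population_variance(values):
    """Population variance: sum of (x - mu)^2 divided by N."""
    mu = sum(values) / len(values)
    return sum((x - mu) ** 2 for x in values) / len(values)

def sample_variance(values):
    """Sample variance: sum of (x - x_bar)^2 divided by (n - 1)."""
    if len(values) < 2:
        raise ValueError("a sample needs at least two values")
    x_bar = sum(values) / len(values)
    return sum((x - x_bar) ** 2 for x in values) / (len(values) - 1)

# Hypothetical data set, chosen so the arithmetic is easy to check by hand.
data = [2, 4, 4, 4, 5, 5, 7, 9]

# The mean is 40 / 8 = 5 and the squared differences add up to 32.
print(population_variance(data))             # 32 / 8 = 4.0
print(math.sqrt(population_variance(data)))  # square root of 4.0 = 2.0
print(sample_variance(data))                 # 32 / 7, roughly 4.57
print(math.sqrt(sample_variance(data)))      # roughly 2.14
```

Notice that the only difference between the two functions is the number we divide by at the end.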

Population vs Sample Data

It’s important to know the difference between population and sample data when calculating variance and standard deviation. Population data includes all of the possible values in a group, while sample data only includes a subset of that group.

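To calculate the variance and standard deviation of population data, we use the first set of formulas above (with σ and N). For sample data, we use the second set of formulas (with s and n - 1). We divide by n - 1 rather than n because the values in a sample tend to sit closer to the sample’s own mean than to the true population mean, so dividing by n would make the variance come out a little too small. Dividing by the slightly smaller number n - 1 corrects for this.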
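In practice you rarely need to write these formulas yourself. As a small sketch, Python’s built-in statistics module provides pvariance and pstdev for population data, and variance and stdev for sample data. The visitor counts below are invented for the example.

```python
import statistics

# Hypothetical daily visitor counts (invented for illustration).
daily_visitors = [12, 15, 11, 18, 14]

# Treat the five values as the entire population (divide by N).
pop_var = statistics.pvariance(daily_visitors)
pop_sd = statistics.pstdev(daily_visitors)

# Treat the same five values as a sample from a larger group (divide by n - 1).
samp_var = statistics.variance(daily_visitors)
samp_sd = statistics.stdev(daily_visitors)

print(f"population: variance={pop_var}, standard deviation={pop_sd:.3f}")
print(f"sample:     variance={samp_var}, standard deviation={samp_sd:.3f}")
```

The mean here is 14 and the squared differences add up to 30, so the population variance is 30 / 5 = 6 and the sample variance is 30 / 4 = 7.5. The sample figures are always a little larger for the same data.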

Why Variance and Standard Deviation Matter

Variance and standard deviation are important because they help us understand how spread out or variable a data set is. This information can be useful in making decisions or drawing conclusions about the data.

For example, let’s say you’re comparing the sales performance of two stores. Store A has average daily sales of $1000 with a standard deviation of $100, while Store B has average daily sales of $1200 with a standard deviation of $200. Although Store B has higher average sales, its daily sales also vary more, which means there’s more risk associated with its sales performance. Store A, on the other hand, may be a more reliable performer, with less variability in its daily sales.
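One way to make this comparison fairer is to express each standard deviation as a fraction of the mean, a ratio usually called the coefficient of variation. This ratio is an addition to the example above, not something the store figures come with, and the names in the sketch are made up for illustration.

```python
# Figures from the example: (average daily sales, standard deviation), in dollars.
stores = {
    "Store A": (1000, 100),
    "Store B": (1200, 200),
}

def coefficient_of_variation(mean, std_dev):
    """Standard deviation expressed as a fraction of the mean."""
    return std_dev / mean

for name, (mean, std_dev) in stores.items():
    cv = coefficient_of_variation(mean, std_dev)
    print(f"{name}: standard deviation is {cv:.1%} of average sales")
```

Store A’s standard deviation is 10% of its average sales, while Store B’s is about 16.7%, so Store B is more variable even after allowing for its higher sales.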

Real-World Case Study

Finance is one field where variance and standard deviation are routinely used to make decisions. In portfolio management, investors use them to measure the risk associated with different investments: the more an investment’s returns vary, the riskier it is considered to be.

For instance, let’s say you’re a portfolio manager who’s considering investing in two stocks. Stock A has an expected return of 10% with a standard deviation of 15%, while Stock B has an expected return of 8% with a standard deviation of 10%. While Stock A has a higher expected return, it also has a higher degree of variability, which makes it riskier to invest in. Stock B, on the other hand, has a lower expected return but also a lower degree of variability, which makes it a less risky investment.
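To put rough numbers on this trade-off, the sketch below divides each stock’s expected return by its standard deviation, giving the return earned per unit of risk. This is a simplified ratio chosen for illustration (it ignores things like the risk-free interest rate that a real analysis would include), and the function name is hypothetical.

```python
# Figures from the example: (expected return, standard deviation), as fractions.
stocks = {
    "Stock A": (0.10, 0.15),
    "Stock B": (0.08, 0.10),
}

def return_per_unit_risk(expected_return, std_dev):
    """Simplified illustration: expected return divided by standard deviation."""
    return expected_return / std_dev

for name, (expected_return, std_dev) in stocks.items():
    variance = std_dev ** 2  # variance is the standard deviation squared
    ratio = return_per_unit_risk(expected_return, std_dev)
    print(f"{name}: variance={variance:.4f}, return per unit of risk={ratio:.2f}")
```

By this rough measure, Stock B earns about 0.80 of return per unit of risk against about 0.67 for Stock A, which is one way to see why a lower-return stock can still be attractive to a cautious investor.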

Conclusion

Variance and standard deviation are important tools in statistics that help us measure the variability in a data set. They’re calculated slightly differently for population and sample data: divide by N for a population and by n - 1 for a sample. By understanding these concepts, we can see not just what is typical in our data but also how much individual values stray from it, and use that information to make better decisions. If you have any questions, feel free to ask!